Query sargability and wildcard LIKE performance

We have all got used to using search. So much so that the verb 'to google' was added to the Oxford English Dictionary on the 15th June 2006. Users are used to blazing fast results and because of this application designers often propose search features where the user can enter any string and search across multiple fields to get their results . These search facilities often don't give any context to the search detailing whether the user is looking for a name, address, phone number etc. On top of this 'requirement' (I put this in quotes as it often isn't a requirement) the customer/user expects lightning fast results. This can often be achieved for small datasets but for large data sets this can become a problem, especially when trying to achieve performance at scale.

To help illustrate my point I started by creating a large dataset. Thanks to the UK Government's project to open up almost all non-personal data for free re-use, producing a data set should be fairly straightforward. After a quick browse around for a suitable dataset I settled on the Basic Company Data dataset. This dataset contains basic information for every live company on the government's company register and at the time of download was accurate upto 1st Jan 2012.

Building a dataset

To build my dataset I downloaded four zip files containing some pretty large CSV files. A few lines of Python later I had these files importing into a flat database table on Microsoft SQL Server 2008. I probably didn't write the most efficient import code but I wasn't too bothered as this was going to be a one time exercise and it was possible to leave it cooking over the weekend. So after approximately 20-30 hours all ~2.8M rows had been imported.

The basic data set comes with around 28 fields including

- Company Name

- Company Number

- Address information

- Date of Incorporation

- Last Accounts Date

- Next Accounts Date

- etc

I imported more columns then I needed for this exercise, but given the time it was likely to take to import I thought I might as well import the entire dataset.

Back to the point.

Ok so we have all our data in a table, lets storyboard a situation.

A user comes and uses our 'Superduper Company Search' application and wants to find a company he/she has heard about. Our user knows that the company is called 'Old' something. The application interface has a simple 'Search' input box. We don't know if the user wants companies called 'Old*' or companies called '*old*'. Because the way the user interface is designed we need to assume the latter to ensure we get all the results.

OK, so how would we go about doing that in SQL?

'LIKE' with wildcards (%).

To do our search the following SQL should do the trick.

SELECT * FROM [companies].[dbo].[companies] WHERE CompanyName LIKE '%old%'

So I'll execute that (and go and make a coffee....)

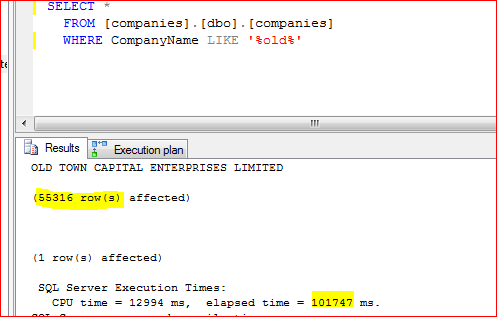

Well 12.94 seconds of CPU time (1 minute 41 seconds including data fetch) later we get our results. Ouch, that was hardly quick. Now the more observant of you will be thinking "You don't have any indexes set up". OK so lets add an index to that column.

USE [companies]

GO

CREATE NONCLUSTERED INDEX [idx_CompanyName] ON [dbo].[companies]

(

[CompanyName] ASC

) ON [PRIMARY]

GO

Running the query again

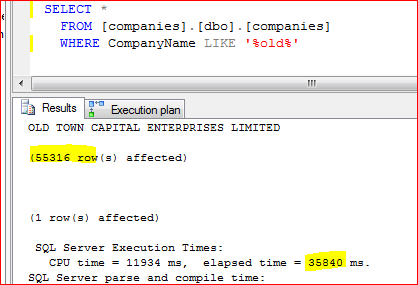

Okay, if we run the same query things are quicker, 11 seconds of CPU time (35.8 secs elapsed if we add the I/O time fetching the records, this improvements here are due to the caching of data in memory). But interestingly they aren't that much quicker, CPU time is almost the same. Not really as quick as we hoped. Lets see why this was so disappointing

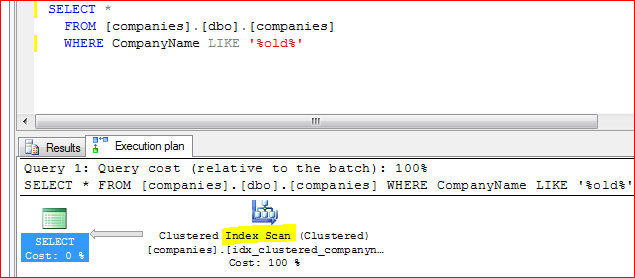

So you can see here that the index we created isn't being used. What is going on is that the clustered index which is used for the primary key for this table (in this case is the unique company number) is being 'scanned'. This is because the index being used isn't doesn't use the field we are performing our 'LIKE' on. An index scan essentially means that the entire table is being scanned with the record lookups for every row being acheived via the index. As you can imagine this is quite resource intensive. If the whole table doesn't fit in RAM then this will create lots of paging from disk. Making the whole process even slower. If the index was being used we would see an index seek, the actual search would be performed via the index itself.

So why wasn't our index on the CompanyName column being used?

The reason our index isn't being used is because the query isn't Sargable i.e. Search Argument Able. A query which is sargable is one where the database engine can use an index to perform the query. A non-sargable query often means that a scan (table scan or index scan) must be performed. So why is LIKE '%old%' not a sargable query?

Lets take a step back. Imagine our dataset is more simplistic, say the English Dictionary. Imagine our query is find all the words in the dictionary that contain the word 'old' i.e. LIKE '%old%'. To answer this question you would need to start at page 1 and go through every word making a note of each word as you find one that contains 'old'. This is essentially an index scan. If I changed the question to give me all the words with 'old' at the begining i.e. LIKE 'old%', then the question becomes much easier to answer. You would 'seek' to the world 'old' in the dictionary and then perform a scan until you hit the first word that didn't begin 'old' which might be the word 'outsold' (according to /usr/share/dict/words it is, the OED would probably suggest otherwise). You answered this question considerably quicker because we have made our query sargable, i.e we were able to use the index to get the answer, in this case the 'index' is the natural sort order/form factor of a dictionary in book form.

So lets answer our question again but this time with the sargable LIKE 'old%' query.

So you can see this query was massively quicker, only 62ms of CPU time, the rest of the time will data I/O time fetching the actual rows from the database. Looking at the execution plan confirms this.

100% (roughly) was spent fetching the rows, and the index seek took virtually no time at all.

But you aren't asking the same question!

Unfortunately by removing the leading wildcard we have essentially changed the question. The point I am trying to make is you need to be fairly sure that you need the leading wildcard to answer your question. Because using it is not going to scale. If you really need this facility then you could look into using a search platform such as Apache-Solr or building your own indexing/search application using Lucene, but these come with their own set of challenges. We might be able to get around the wildcard requirement by changing the user interface to 'Search for companies begining with : '. This isn't always possible. As I mentioned earlier its worth investigating whether full willcard search is a geniune requirement. If it isn't then make your interface sypathetic to the underlying database. Then maybe later when/if the requirement arises you can invest more time/effort into doing full wildcard searches using methods like I suggest.

What else could make my query non-sargable?

The basic rules of thumb are to

- Make sure you have an index for the fields you are querying against.

- Avoid functions on the left handside of a query.

e.g.

SELECT * FROM [companies].[dbo].[companies] WHERE YEAR(DateOfIncorporation) = 2013

instead use

SELECT * FROM [companies].[dbo].[companies] WHERE DateOfIncorporation > '31-12-2012' AND DateOfIncorporation < '01-01-2014'

- Avoid using non-sargable predicates

e.g.

Imagine one of the company fields is the phone area code as a varchar (because it requires the preceeding 0).

SELECT * FROM [companies].[dbo].[companies] WHERE AreaCode = 01792

instead use

SELECT * FROM [companies].[dbo].[companies] WHERE AreaCode = '01792'

Because int has a lower precedence than varchar the int isn't converted to a string and then compared against the index. Instead a index scan is forced.

I hope this helps you improve the performance of some of your queries.